Installing OpenShift Container Platform 3.7 on a Single Node

OpenShift 全部俺 Advent Calendar 2017

Let's install the commercial OpenShift Container Platform 3.7 on a single node.

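Here is the /etc/ansible/hosts file I use. In OpenShift Container Platform 3.6 and earlier, a single-master setup ran master-api, master-controllers, and etcd all in one `master` process. To improve manageability, 3.7 drops that layout, and a single master now uses the same process layout as a multi-master cluster.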

```ini
[OSEv3:children]
masters
etcd
nodes

[OSEv3:vars]
ansible_ssh_user=nekop
ansible_become=true
deployment_type=openshift-enterprise
openshift_master_identity_providers=[{'name': 'allow_all', 'login': 'true', 'challenge': 'true', 'kind': 'AllowAllPasswordIdentityProvider'}]
os_sdn_network_plugin_name=redhat/openshift-ovs-multitenant
openshift_node_kubelet_args={'kube-reserved': ['cpu=100m,memory=100Mi'], 'system-reserved': ['cpu=100m,memory=100Mi'], 'eviction-hard': [ 'memory.available<4%', 'nodefs.available<4%', 'nodefs.inodesFree<4%', 'imagefs.available<4%', 'imagefs.inodesFree<4%' ], 'eviction-soft': [ 'memory.available<8%', 'nodefs.available<8%', 'nodefs.inodesFree<8%', 'imagefs.available<8%', 'imagefs.inodesFree<8%' ], 'eviction-soft-grace-period': [ 'memory.available=1m30s', 'nodefs.available=1m30s', 'nodefs.inodesFree=1m30s', 'imagefs.available=1m30s', 'imagefs.inodesFree=1m30s' ]}
openshift_disable_check=memory_availability,disk_availability
openshift_master_default_subdomain=apps.example.com

[masters]
s37.example.com

[etcd]
s37.example.com

[nodes]
s37.example.com openshift_node_labels="{'region': 'infra'}" openshift_schedulable=true
```
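On a single node the same host has to appear in every group, so a quick check that [masters], [etcd], and [nodes] all list it can catch a typo before a long Ansible run. This is a minimal sketch assuming the plain INI layout above; `hosts_in_group` and `check_single_node` are hypothetical helpers, not part of openshift-ansible.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the host names listed in one [group] section of an
# INI-style Ansible inventory. Host variables after the first field are ignored.
hosts_in_group() {
  local inventory="$1" group="$2"
  awk -v want="[$group]" '
    /^\[/ { in_group = ($0 == want); next }          # section header: entering the wanted group?
    in_group && NF > 0 && $1 !~ /^#/ { print $1 }   # first field of non-empty, non-comment lines
  ' "$inventory"
}

# Hypothetical helper: fail if HOST is missing from any group a single node needs.
check_single_node() {
  local inventory="$1" host="$2" group
  for group in masters etcd nodes; do
    if ! hosts_in_group "$inventory" "$group" | grep -qxF "$host"; then
      echo "missing $host in [$group]" >&2
      return 1
    fi
  done
}
```

Usage: `check_single_node /etc/ansible/hosts s37.example.com && echo ok`.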

I start from a RHEL 7.4 VM with a static IP, to which I have already run ssh-copy-id for root, and run the pre-install preparation roughly as follows. Docker's storage driver is set to overlay2.

```bash
HOSTNAME=s37.example.com
RHSM_USERNAME=
RHSM_PASSWORD=
RHSM_POOLID=
OPENSHIFT_VERSION=3.7

hostnamectl set-hostname "$HOSTNAME"
timedatectl set-timezone Asia/Tokyo

# Admin user for Ansible, with passwordless sudo
adduser -G wheel nekop
cat << EOM > /etc/sudoers.d/wheel
%wheel ALL=(ALL) NOPASSWD: ALL
Defaults:%wheel !requiretty
EOM
mkdir /home/nekop/.ssh/
cp -a /root/.ssh/authorized_keys /home/nekop/.ssh/
chown nekop.nekop -R /home/nekop/.ssh/

# Subscription and repositories
subscription-manager register --username="$RHSM_USERNAME" --password="$RHSM_PASSWORD"
subscription-manager attach --pool "$RHSM_POOLID"
subscription-manager repos --disable='*'
subscription-manager repos \
  --enable=rhel-7-server-rpms \
  --enable=rhel-7-server-extras-rpms \
  --enable=rhel-7-server-optional-rpms \
  --enable=rhel-7-server-rh-common-rpms \
  --enable=rhel-7-fast-datapath-rpms \
  --enable=rhel-7-server-ose-$OPENSHIFT_VERSION-rpms

yum update -y
yum install chrony wget git net-tools bind-utils iptables-services bridge-utils nfs-utils sos sysstat bash-completion lsof tcpdump yum-utils yum-cron docker atomic-openshift-utils -y

# Use the overlay2 storage driver for docker
sed -i 's/DOCKER_STORAGE_OPTIONS=/DOCKER_STORAGE_OPTIONS="--storage-driver overlay2"/' /etc/sysconfig/docker-storage
systemctl enable chronyd docker
reboot
```
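The sed above only works on the first run: it replaces the literal prefix `DOCKER_STORAGE_OPTIONS=`, so running the script again would append the option a second time. Here is a sketch of an idempotent variant, assuming the file has a single `DOCKER_STORAGE_OPTIONS=` line; `set_overlay2` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: force overlay2 in a docker-storage sysconfig file.
# Anchoring with ^ and replacing the rest of the line (.*) makes repeated runs idempotent.
set_overlay2() {
  local file="${1:-/etc/sysconfig/docker-storage}"
  sed -i 's/^DOCKER_STORAGE_OPTIONS=.*/DOCKER_STORAGE_OPTIONS="--storage-driver overlay2"/' "$file"
}
```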

The host is now ready for installation. After logging back in, I generate an SSH key, run ssh-copy-id, and run Ansible. If everything succeeds, I reboot once more, partly as a sanity check.

```
ssh nekop@s37.example.com
ssh-keygen
ssh-copy-id s37.example.com
sudo vi /etc/ansible/hosts
ansible-playbook -vvvv /usr/share/ansible/openshift-ansible/playbooks/byo/config.yml | tee -a openshift-install-$(date +%Y%m%d%H%M%S).log
sudo reboot
```
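One caveat with piping `ansible-playbook` into `tee`: the exit status of the pipeline is that of `tee`, so a failed playbook still looks successful to a wrapping script. A minimal bash sketch that keeps the timestamped log but returns the command's own status follows; `run_logged` is a hypothetical helper, and unlike the original command it also captures stderr in the log.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command, append its stdout and stderr to LOG,
# and return the command's exit status instead of tee's.
run_logged() {
  local log="$1"; shift
  "$@" 2>&1 | tee -a "$log"
  return "${PIPESTATUS[0]}"
}

# Example (not executed here):
# run_logged "openshift-install-$(date +%Y%m%d%H%M%S).log" \
#   ansible-playbook -vvvv /usr/share/ansible/openshift-ansible/playbooks/byo/config.yml
```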

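With multiple nodes, the easy route is to set up GlusterFS during installation and provide PVs from Gluster. Since this is a single node, I create hostPath PVs instead. To let containers access them under SELinux, I set the svirt_sandbox_file_t type with chcon.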

```bash
# Creating PersistentVolumes requires cluster-admin privileges.
COUNT=100
sudo mkdir -p /var/lib/origin/openshift.local.pv/
for i in $(seq 1 $COUNT); do
  PVNAME=pv$(printf %04d $i)
  sudo mkdir -p /var/lib/origin/openshift.local.pv/$PVNAME
  cat <<EOF | oc create -f -
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $PVNAME
spec:
  accessModes:
  - ReadWriteOnce
  - ReadWriteMany
  - ReadOnlyMany
  capacity:
    storage: 100Gi
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: /var/lib/origin/openshift.local.pv/$PVNAME
EOF
done
sudo chcon -R -t svirt_sandbox_file_t /var/lib/origin/openshift.local.pv/
sudo chmod -R 777 /var/lib/origin/openshift.local.pv/
```
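The loop above makes 100 separate `oc create` calls. An alternative sketch builds one `kind: List` manifest containing all the PVs, which you can review first and then create with a single call. The function `pv_list` and its arguments are hypothetical; the PV fields mirror the loop above, and the directories still have to be created and labeled as shown there.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a v1 List of hostPath PersistentVolumes
# pv0001..pvNNNN rooted at BASE, using the same fields as the loop above.
pv_list() {
  local count="$1" base="${2:-/var/lib/origin/openshift.local.pv}" i name
  echo "apiVersion: v1"
  echo "kind: List"
  echo "items:"
  for i in $(seq 1 "$count"); do
    name=$(printf 'pv%04d' "$i")
    cat <<EOF
- apiVersion: v1
  kind: PersistentVolume
  metadata:
    name: $name
  spec:
    accessModes:
    - ReadWriteOnce
    - ReadWriteMany
    - ReadOnlyMany
    capacity:
      storage: 100Gi
    persistentVolumeReclaimPolicy: Retain
    hostPath:
      path: $base/$name
EOF
  done
}

# Usage (requires cluster-admin):
# pv_list 100 > pvs.yaml && oc create -f pvs.yaml
```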


Red Hat OpenShift Application Runtimes


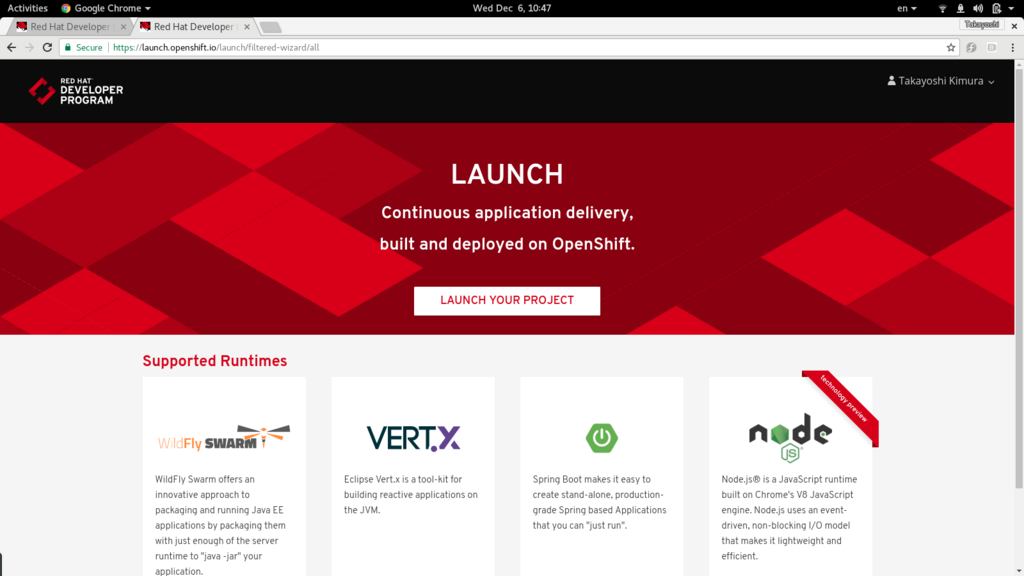

Red Hat OpenShift Application Runtimes has been released, so let's try it out. It is a product that supports developing microservice applications on OpenShift with Spring Boot, Vert.x, WildFly Swarm, and others.

The site https://launch.openshift.io/ generates project skeletons, so let's start by using it to look at a WildFly Swarm project.

The generated project appears to use fabric8, which supports both local development and development on OpenShift. oc get all shows a Binary build: instead of OpenShift building from source, the war file built locally is uploaded and used to build the container image.

```
$ mvn clean fabric8:deploy
(long output omitted)
$ oc get all
NAME                                          TYPE      FROM      LATEST
buildconfigs/booster-crud-wildfly-swarm-s2i   Source    Binary    1

NAME                                  TYPE      FROM      STATUS     STARTED         DURATION
builds/booster-crud-wildfly-swarm-s2i-1   Source    Binary    Complete   2 minutes ago   2m3s

NAME                                      DOCKER REPO                                             TAGS      UPDATED
imagestreams/booster-crud-wildfly-swarm   172.30.1.1:5000/test-rhoar/booster-crud-wildfly-swarm   latest    50 seconds ago

NAME                                           REVISION   DESIRED   CURRENT   TRIGGERED BY
deploymentconfigs/booster-crud-wildfly-swarm   1          1         1         config,image(booster-crud-wildfly-swarm:latest)

NAME                                HOST/PORT                                                     PATH      SERVICES                     PORT      TERMINATION   WILDCARD
routes/booster-crud-wildfly-swarm   booster-crud-wildfly-swarm-test-rhoar.192.168.42.143.nip.io             booster-crud-wildfly-swarm   8080                    None

NAME                                      READY     STATUS      RESTARTS   AGE
po/booster-crud-wildfly-swarm-1-deploy    1/1       Running     0          18s
po/booster-crud-wildfly-swarm-1-fgdj8     0/1       Running     0          15s
po/booster-crud-wildfly-swarm-s2i-1-build 0/1       Completed   0          2m

NAME                              DESIRED   CURRENT   READY     AGE
rc/booster-crud-wildfly-swarm-1   1         1         0         18s

NAME                             CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
svc/booster-crud-wildfly-swarm   172.30.79.11   <none>        8080/TCP   20s
```

The BuildConfig uses registry.access.redhat.com/redhat-openjdk-18/openjdk18-openshift as its builder image, so with that image it should also work to let OpenShift build from source (an s2i build) instead of building locally and uploading the binary.

So I created a minimal Swarm project that uses RHOAR: https://github.com/nekop/hello-swarm

```
$ oc new-project test-swarm
$ oc new-app registry.access.redhat.com/redhat-openjdk-18/openjdk18-openshift~https://github.com/nekop/hello-swarm
$ oc expose svc hello-swarm
$ curl hello-swarm-test-rhoar.192.168.42.143.nip.io
hello world
```

Basics of the OpenShift oc command

When you start using OpenShift, a few commands are worth learning first, for example oc get all and oc get event. When something doesn't work, these two are usually where you end up looking.

The oc command has thorough built-in help, so make a habit of adding the -h option whenever you are unsure about something. The output of oc -h is included at the end.

Here are a few commands, roughly in order of how often I use them.

```
oc get all
oc get event
oc logs POD_NAME
oc rsh POD_NAME
oc debug DC_NAME
oc explain
```

oc get all

oc get all is handy for a quick overview of the current state. Adding -o wide shows a bit more, such as the node name, so I recommend adding it as a rule.

```
$ oc get all -o wide
NAME                         TYPE      FROM      LATEST
buildconfigs/hello-sinatra   Source    Git       1

NAME                     TYPE      FROM          STATUS     STARTED      DURATION
builds/hello-sinatra-1   Source    Git@cf28c79   Complete   4 days ago   1m16s

NAME                         DOCKER REPO                               TAGS      UPDATED
imagestreams/hello-sinatra   172.30.1.1:5000/test-ruby/hello-sinatra   latest    4 days ago

NAME                              REVISION   DESIRED   CURRENT   TRIGGERED BY
deploymentconfigs/hello-sinatra   1          1         1         config,image(hello-sinatra:latest)

NAME                   HOST/PORT                                       PATH      SERVICES        PORT       TERMINATION   WILDCARD
routes/hello-sinatra   hello-sinatra-test-ruby.192.168.42.225.xip.io             hello-sinatra   8080-tcp                 None

NAME                       READY     STATUS      RESTARTS   AGE       IP           NODE
po/hello-sinatra-1-build   0/1       Completed   0          4d        172.17.0.2   localhost
po/hello-sinatra-1-jxqm5   1/1       Running     1          4d        172.17.0.3   localhost

NAME                 DESIRED   CURRENT   READY     AGE       CONTAINER(S)    IMAGE(S)                                                                                                            SELECTOR
rc/hello-sinatra-1   1         1         1         4d        hello-sinatra   172.30.1.1:5000/test-ruby/hello-sinatra@sha256:623df61eea9fb550df241a2cd8e909b44fd2fb69a4d82298f7221f5c92488167   app=hello-sinatra,deployment=hello-sinatra-1,deploymentconfig=hello-sinatra

NAME                CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE       SELECTOR
svc/hello-sinatra   172.30.168.102   <none>        8080/TCP   4d        app=hello-sinatra,deploymentconfig=hello-sinatra
```

oc get all -o yaml dumps everything in YAML format. It is often used for backups or to check every detail, but the output is large, so you will usually redirect it to a file and read it from there.
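For the backup use case, a small wrapper can write the dump to a timestamped file per project. This is a sketch assuming a logged-in oc session; `backup_project` is a hypothetical name. Judging from the API calls oc get all makes (listed next), it skips types such as Secrets, ConfigMaps, and PersistentVolumeClaims, so this is not a complete backup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: dump `oc get all -o yaml` for PROJECT into
# PROJECT-all-YYYYmmddHHMMSS.yaml in the current directory and print the file name.
backup_project() {
  local project="$1" out
  out="${project}-all-$(date +%Y%m%d%H%M%S).yaml"
  oc get all -o yaml -n "$project" > "$out" || return
  echo "$out"
}

# Usage:
# backup_project test-ruby
```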

Passing --loglevel=7 to oc also logs every REST API call it makes. For example, oc get all queries the following APIs:

```
$ oc get all --loglevel=7 2>&1 | grep http
I1205 16:23:49.320760 1645 round_trippers.go:383] GET https://192.168.42.225:8443/oapi/v1/namespaces/test-ruby/buildconfigs
I1205 16:23:49.335285 1645 round_trippers.go:383] GET https://192.168.42.225:8443/oapi/v1/namespaces/test-ruby/builds
I1205 16:23:49.337681 1645 round_trippers.go:383] GET https://192.168.42.225:8443/oapi/v1/namespaces/test-ruby/imagestreams
I1205 16:23:49.339604 1645 round_trippers.go:383] GET https://192.168.42.225:8443/oapi/v1/namespaces/test-ruby/deploymentconfigs
I1205 16:23:49.341773 1645 round_trippers.go:383] GET https://192.168.42.225:8443/oapi/v1/namespaces/test-ruby/routes
I1205 16:23:49.343972 1645 round_trippers.go:383] GET https://192.168.42.225:8443/api/v1/namespaces/test-ruby/pods
I1205 16:23:49.350071 1645 round_trippers.go:383] GET https://192.168.42.225:8443/api/v1/namespaces/test-ruby/replicationcontrollers
I1205 16:23:49.352935 1645 round_trippers.go:383] GET https://192.168.42.225:8443/api/v1/namespaces/test-ruby/services
I1205 16:23:49.355540 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/apps/v1beta1/namespaces/test-ruby/statefulsets
I1205 16:23:49.357334 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/autoscaling/v1/namespaces/test-ruby/horizontalpodautoscalers
I1205 16:23:49.359109 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/batch/v1/namespaces/test-ruby/jobs
I1205 16:23:49.360826 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/batch/v2alpha1/namespaces/test-ruby/cronjobs
I1205 16:23:49.362590 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/extensions/v1beta1/namespaces/test-ruby/deployments
I1205 16:23:49.364211 1645 round_trippers.go:383] GET https://192.168.42.225:8443/apis/extensions/v1beta1/namespaces/test-ruby/replicasets
```
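To reduce that log to just the requests, a short filter can keep the method and URL from the round_trippers lines. A sketch assuming the line format shown above, where the method and URL are the last two fields; other round_trippers lines that oc may print (headers, response status) are skipped because their second-to-last field is not an HTTP method. `api_calls` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Print "METHOD URL" for each request line in oc --loglevel output read on stdin.
api_calls() {
  awk '/round_trippers\.go/ && $(NF-1) ~ /^(GET|POST|PUT|PATCH|DELETE)$/ { print $(NF-1), $NF }'
}

# Usage:
# oc get all --loglevel=7 2>&1 | api_calls
```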

oc get event

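Shows the event log. Hints about, and causes of, problems such as a failing deployment almost always appear here. When you are iterating on a deployment from the CLI, running it with the watch option -w so that events keep streaming makes it much easier to follow what is happening.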

```
oc get event -w &
```
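When the event list gets long, it can help to keep only warnings. A minimal sketch that keeps the header and any line containing ` Warning `; it matches on the word rather than a column number because the SUBOBJECT column is often empty, so a message that itself contains " Warning " would also match. `warnings_only` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Keep the header line plus events whose TYPE is Warning (matched as a word).
warnings_only() {
  awk 'NR == 1 || / Warning /'
}

# Usage:
# oc get event | warnings_only
```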

oc logs POD_NAME

Shows a container's logs. The --timestamps option prefixes every line with a timestamp, whether or not the container writes timestamps itself.

For a pod that is crash-looping, the -p option shows the logs from the previous container instance.

```
oc logs --timestamps POD_NAME
oc logs -p --timestamps POD_NAME
```
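When collecting evidence from a crash-looping pod, it is convenient to save both logs at once. A sketch assuming a single-container pod (multi-container pods would need `-c CONTAINER`); `save_pod_logs` is a hypothetical helper. The previous log exists only after a restart, so a failure there is tolerated.

```bash
#!/usr/bin/env bash
# Hypothetical helper: save current and previous container logs, with timestamps,
# for POD into DIR as POD.log and POD.previous.log.
save_pod_logs() {
  local pod="$1" dir="${2:-.}"
  mkdir -p "$dir"
  oc logs --timestamps "$pod" > "$dir/$pod.log" || return
  if ! oc logs -p --timestamps "$pod" > "$dir/$pod.previous.log" 2>/dev/null; then
    rm -f "$dir/$pod.previous.log"   # no previous instance; drop the empty file
  fi
}
```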

oc rsh POD_NAME

Opens a shell inside the pod. Use it to investigate from inside the container.

```
oc rsh POD_NAME
```

oc debug DC_NAME

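Starts a debug pod. The command given by the image's Entrypoint or Cmd is not run, and you get a shell instead. That makes it useful for problems that are hard to investigate with oc rsh, such as the Entrypoint or Cmd command exiting with an error immediately.

```
oc debug dc/hello-sinatra
```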

oc explain RESOURCE_TYPE

Shows documentation for each resource. By giving a path that follows the resource's YAML structure, such as dc.spec.template, you can look up a field name you have forgotten or find out which settings are available.

```
$ oc explain dc.spec.template
RESOURCE: template <Object>

DESCRIPTION:
     Template is the object that describes the pod that will be created if
     insufficient replicas are detected.

    PodTemplateSpec describes the data a pod should have when created from a template

FIELDS:
   metadata	<Object>
     Standard object's metadata. More info:
     https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata

   spec	<Object>
     Specification of the desired behavior of the pod. More info: https://
     git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status/
```

Help

The oc command has thorough help. Every subcommand also prints its help when given -h.

```
$ oc -h
OpenShift Client

This client helps you develop, build, deploy, and run your applications on any OpenShift or Kubernetes compatible platform. It also includes the administrative commands for managing a cluster under the 'adm' subcommand.

Basic Commands:
  types           An introduction to concepts and types
  login           Log in to a server
  new-project     Request a new project
  new-app         Create a new application
  status          Show an overview of the current project
  project         Switch to another project
  projects        Display existing projects
  explain         Documentation of resources
  cluster         Start and stop OpenShift cluster

Build and Deploy Commands:
  rollout         Manage a Kubernetes deployment or OpenShift deployment config
  rollback        Revert part of an application back to a previous deployment
  new-build       Create a new build configuration
  start-build     Start a new build
  cancel-build    Cancel running, pending, or new builds
  import-image    Imports images from a Docker registry
  tag             Tag existing images into image streams

Application Management Commands:
  get             Display one or many resources
  describe        Show details of a specific resource or group of resources
  edit            Edit a resource on the server
  set             Commands that help set specific features on objects
  label           Update the labels on a resource
  annotate        Update the annotations on a resource
  expose          Expose a replicated application as a service or route
  delete          Delete one or more resources
  scale           Change the number of pods in a deployment
  autoscale       Autoscale a deployment config, deployment, replication controller, or replica set
  secrets         Manage secrets
  serviceaccounts Manage service accounts in your project

Troubleshooting and Debugging Commands:
  logs            Print the logs for a resource
  rsh             Start a shell session in a pod
  rsync           Copy files between local filesystem and a pod
  port-forward    Forward one or more local ports to a pod
  debug           Launch a new instance of a pod for debugging
  exec            Execute a command in a container
  proxy           Run a proxy to the Kubernetes API server
  attach          Attach to a running container
  run             Run a particular image on the cluster
  cp              Copy files and directories to and from containers.

Advanced Commands:
  adm             Tools for managing a cluster
  create          Create a resource by filename or stdin
  replace         Replace a resource by filename or stdin
  apply           Apply a configuration to a resource by filename or stdin
  patch           Update field(s) of a resource using strategic merge patch
  process         Process a template into list of resources
  export          Export resources so they can be used elsewhere
  extract         Extract secrets or config maps to disk
  idle            Idle scalable resources
  observe         Observe changes to resources and react to them (experimental)
  policy          Manage authorization policy
  auth            Inspect authorization
  convert         Convert config files between different API versions
  import          Commands that import applications
  image           Useful commands for managing images

Settings Commands:
  logout          End the current server session
  config          Change configuration files for the client
  whoami          Return information about the current session
  completion      Output shell completion code for the specified shell (bash or zsh)

Other Commands:
  help            Help about any command
  plugin          Runs a command-line plugin
  version         Display client and server versions

Use "oc <command> --help" for more information about a given command.
Use "oc options" for a list of global command-line options (applies to all commands).
```

Running an application with OpenShift's basic build-from-source and deploy


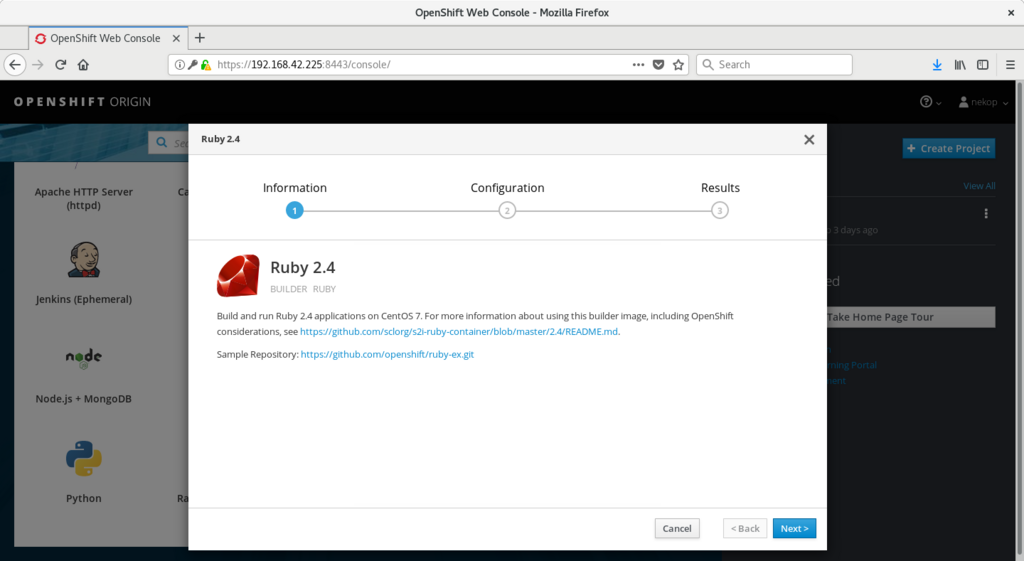

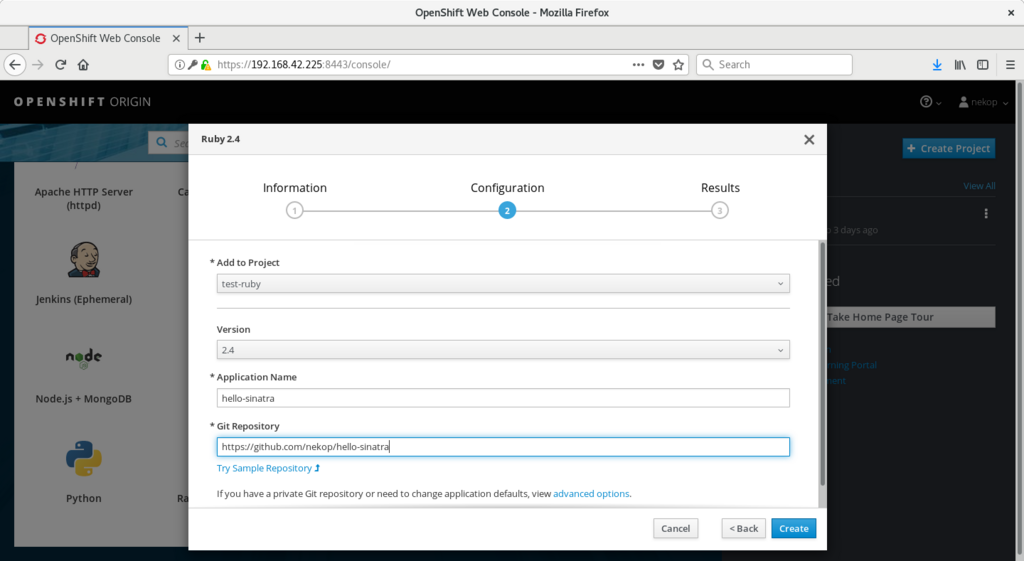

Now that minishift has given us an environment, let's build and run an application. We'll use the build strategy called s2i (source-to-image), which builds a container image from source code.

Initially you are the developer user, in a project called myproject. This information is stored in the ~/.kube/config file.

```
$ oc whoami
developer
$ oc status
In project My Project (myproject) on server https://192.168.42.225:8443

You have no services, deployment configs, or build configs.
Run 'oc new-app' to create an application.
```

Using defaults like myproject often causes trouble later when you want to keep things separate, and I don't much like it, so I create a separate user and a separate project.

The user is nekop, and since the application is written in Ruby, the project is test-ruby. minishift is configured with the AllowAll identity provider, so any user name is accepted with any non-empty password.

```
$ oc login -u nekop
Authentication required for https://192.168.42.225:8443 (openshift)
Username: nekop
Password:
Login successful.

You don't have any projects. You can try to create a new project, by running

    oc new-project <projectname>

$ oc new-project test-ruby
Now using project "test-ruby" on server "https://192.168.42.225:8443".

You can add applications to this project with the 'new-app' command. For example, try:

    oc new-app centos/ruby-22-centos7~https://github.com/openshift/ruby-ex.git

to build a new example application in Ruby.
```
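Because AllowAll accepts any non-empty password, the interactive prompt can be skipped when scripting these steps on a throwaway minishift cluster. A sketch; `login_and_project` and the dummy password are hypothetical, and real passwords should never go on the command line because they end up in shell history.

```bash
#!/usr/bin/env bash
# Hypothetical helper for an AllowAll test cluster: log in without a prompt,
# then switch to PROJECT, creating it if it does not exist yet.
login_and_project() {
  local server="$1" user="$2" project="$3"
  oc login "$server" -u "$user" -p dummy >/dev/null || return
  oc project "$project" >/dev/null 2>&1 || oc new-project "$project"
}

# Usage:
# login_and_project https://192.168.42.225:8443 nekop test-ruby
```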

Run oc new-app with the application's source repository. Here I point it at https://github.com/nekop/hello-sinatra, a Hello World app written with Sinatra, a Ruby web framework.

```
$ oc new-app https://github.com/nekop/hello-sinatra
--> Found image a6f8569 (10 days old) in image stream "openshift/ruby" under tag "2.4" for "ruby"

    Ruby 2.4
    --------
    Ruby 2.4 available as docker container is a base platform for building and running various Ruby 2.4 applications and frameworks. Ruby is the interpreted scripting language for quick and easy object-oriented programming. It has many features to process text files and to do system management tasks (as in Perl). It is simple, straight-forward, and extensible.

    Tags: builder, ruby, ruby24, rh-ruby24

    * The source repository appears to match: ruby
    * A source build using source code from https://github.com/nekop/hello-sinatra will be created
      * The resulting image will be pushed to image stream "hello-sinatra:latest"
      * Use 'start-build' to trigger a new build
    * This image will be deployed in deployment config "hello-sinatra"
    * Port 8080/tcp will be load balanced by service "hello-sinatra"
      * Other containers can access this service through the hostname "hello-sinatra"

--> Creating resources ...
    imagestream "hello-sinatra" created
    buildconfig "hello-sinatra" created
    deploymentconfig "hello-sinatra" created
    service "hello-sinatra" created
--> Success
    Build scheduled, use 'oc logs -f bc/hello-sinatra' to track its progress.
    Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:
     'oc expose svc/hello-sinatra'
    Run 'oc status' to view your app.
```

The application is built with the openshift/ruby:2.4 image.

There are many commands for checking the status: oc status, oc get all, oc logs, oc get event, and so on. I'll cover them in detail another day. Probably.

```
$ oc status
In project test-ruby on server https://192.168.42.225:8443

svc/hello-sinatra - 172.30.168.102:8080
  dc/hello-sinatra deploys istag/hello-sinatra:latest <-
    bc/hello-sinatra source builds https://github.com/nekop/hello-sinatra on openshift/ruby:2.4
      build #1 running for 22 seconds - cf28c79: Add index.html (Takayoshi Kimura <takayoshi@gmail.com>)
    deployment #1 waiting on image or update

View details with 'oc describe <resource>/<name>' or list everything with 'oc get all'.

$ oc get all
NAME                         TYPE      FROM      LATEST
buildconfigs/hello-sinatra   Source    Git       1

NAME                     TYPE      FROM          STATUS    STARTED          DURATION
builds/hello-sinatra-1   Source    Git@cf28c79   Running   48 seconds ago

NAME                         DOCKER REPO                               TAGS      UPDATED
imagestreams/hello-sinatra   172.30.1.1:5000/test-ruby/hello-sinatra

NAME                              REVISION   DESIRED   CURRENT   TRIGGERED BY
deploymentconfigs/hello-sinatra   0          1         0         config,image(hello-sinatra:latest)

NAME                       READY     STATUS    RESTARTS   AGE
po/hello-sinatra-1-build   1/1       Running   0          48s

NAME                CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
svc/hello-sinatra   172.30.168.102   <none>        8080/TCP   48s

$ oc logs hello-sinatra-1-build -f
---> Installing application source ...
---> Building your Ruby application from source ...
---> Running 'bundle install --deployment --without development:test' ...
Fetching gem metadata from https://rubygems.org/.........
Fetching version metadata from https://rubygems.org/.
Installing rake 11.1.2
Installing power_assert 0.2.7
Installing rack 1.6.4
Installing tilt 2.0.2
Using bundler 1.13.7
Installing test-unit 3.1.8
Installing rack-protection 1.5.3
Installing rack-test 0.6.3
Installing sinatra 1.4.7
Bundle complete! 4 Gemfile dependencies, 9 gems now installed.
Gems in the groups development and test were not installed.
Bundled gems are installed into ./bundle.
---> Cleaning up unused ruby gems ...
Running `bundle clean --verbose` with bundler 1.13.7
Found no changes, using resolution from the lockfile
/opt/app-root/src/bundle/ruby/2.4.0/gems/rake-11.1.2/lib/rake/ext/fixnum.rb:4: warning: constant ::Fixnum is deprecated
Pushing image 172.30.1.1:5000/test-ruby/hello-sinatra:latest ...
Pushed 0/10 layers, 0% complete
Pushed 1/10 layers, 14% complete
Pushed 2/10 layers, 25% complete
Pushed 3/10 layers, 31% complete
Pushed 4/10 layers, 43% complete
Pushed 5/10 layers, 53% complete
Pushed 6/10 layers, 65% complete
Pushed 7/10 layers, 75% complete
Pushed 8/10 layers, 84% complete
Pushed 9/10 layers, 99% complete
Pushed 10/10 layers, 100% complete
Push successful

$ oc get event
LASTSEEN FIRSTSEEN COUNT NAME KIND SUBOBJECT TYPE REASON SOURCE MESSAGE
2m 2m 1 hello-sinatra-1-build Pod Normal Scheduled default-scheduler Successfully assigned hello-sinatra-1-build to localhost
2m 2m 1 hello-sinatra-1-build Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "crio-socket"
2m 2m 1 hello-sinatra-1-build Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "buildworkdir"
2m 2m 1 hello-sinatra-1-build Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "docker-socket"
2m 2m 1 hello-sinatra-1-build Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "builder-dockercfg-2g5jw-push"
2m 2m 1 hello-sinatra-1-build Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "builder-token-q8h4n"
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{git-clone} Normal Pulling kubelet, localhost pulling image "openshift/origin-sti-builder:v3.7.0"
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{git-clone} Normal Pulled kubelet, localhost Successfully pulled image "openshift/origin-sti-builder:v3.7.0"
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{git-clone} Normal Created kubelet, localhost Created container
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{git-clone} Normal Started kubelet, localhost Started container
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{manage-dockerfile} Normal Pulled kubelet, localhost Container image "openshift/origin-sti-builder:v3.7.0" already present on machine
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{manage-dockerfile} Normal Created kubelet, localhost Created container
2m 2m 1 hello-sinatra-1-build Pod spec.initContainers{manage-dockerfile} Normal Started kubelet, localhost Started container
2m 2m 1 hello-sinatra-1-build Pod spec.containers{sti-build} Normal Pulled kubelet, localhost Container image "openshift/origin-sti-builder:v3.7.0" already present on machine
2m 2m 1 hello-sinatra-1-build Pod spec.containers{sti-build} Normal Created kubelet, localhost Created container
2m 2m 1 hello-sinatra-1-build Pod spec.containers{sti-build} Normal Started kubelet, localhost Started container
1m 1m 1 hello-sinatra-1-deploy Pod Normal Scheduled default-scheduler Successfully assigned hello-sinatra-1-deploy to localhost
1m 1m 1 hello-sinatra-1-deploy Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "deployer-token-82bmt"
1m 1m 1 hello-sinatra-1-deploy Pod spec.containers{deployment} Normal Pulled kubelet, localhost Container image "openshift/origin-deployer:v3.7.0" already present on machine
1m 1m 1 hello-sinatra-1-deploy Pod spec.containers{deployment} Normal Created kubelet, localhost Created container
1m 1m 1 hello-sinatra-1-deploy Pod spec.containers{deployment} Normal Started kubelet, localhost Started container
1m 1m 1 hello-sinatra-1-deploy Pod spec.containers{deployment} Normal Killing kubelet, localhost Killing container with id docker://deployment:Need to kill Pod
1m 1m 1 hello-sinatra-1-jxqm5 Pod Normal Scheduled default-scheduler Successfully assigned hello-sinatra-1-jxqm5 to localhost
1m 1m 1 hello-sinatra-1-jxqm5 Pod Normal SuccessfulMountVolume kubelet, localhost MountVolume.SetUp succeeded for volume "default-token-fhb4l"
1m 1m 1 hello-sinatra-1-jxqm5 Pod spec.containers{hello-sinatra} Normal Pulling kubelet, localhost pulling image "172.30.1.1:5000/test-ruby/hello-sinatra@sha256:623df61eea9fb550df241a2cd8e909b44fd2fb69a4d82298f7221f5c92488167"
1m 1m 1 hello-sinatra-1-jxqm5 Pod spec.containers{hello-sinatra} Normal Pulled kubelet, localhost Successfully pulled image "172.30.1.1:5000/test-ruby/hello-sinatra@sha256:623df61eea9fb550df241a2cd8e909b44fd2fb69a4d82298f7221f5c92488167"
1m 1m 1 hello-sinatra-1-jxqm5 Pod spec.containers{hello-sinatra} Normal Created kubelet, localhost Created container
1m 1m 1 hello-sinatra-1-jxqm5 Pod spec.containers{hello-sinatra} Normal Started kubelet, localhost Started container
2m 2m 1 hello-sinatra-1 Build Normal BuildStarted build-controller Build test-ruby/hello-sinatra-1 is now running
1m 1m 1 hello-sinatra-1 Build Normal BuildCompleted build-controller Build test-ruby/hello-sinatra-1 completed successfully
1m 1m 1 hello-sinatra-1 ReplicationController Normal SuccessfulCreate replication-controller Created pod: hello-sinatra-1-jxqm5
2m 2m 1 hello-sinatra BuildConfig Warning BuildConfigTriggerFailed buildconfig-controller error triggering Build for BuildConfig test-ruby/hello-sinatra: Internal error occurred: build config test-ruby/hello-sinatra has already instantiated a build for imageid centos/ruby-24-centos7@sha256:905b51254a455c716892314ef3a029a4b9a4b1e832a9a1518783577f115956fd
1m 1m 1 hello-sinatra DeploymentConfig Normal DeploymentCreated deploymentconfig-controller Created new replication controller "hello-sinatra-1" for version 1

$ oc get pod
NAME                    READY     STATUS      RESTARTS   AGE
hello-sinatra-1-build   0/1       Completed   0          1m
hello-sinatra-1-jxqm5   1/1       Running     0          25s
```

The last output shows the application pod as Running, so the deployment appears to have succeeded.

oc new-app does not create a Route for external access automatically, so let's create one and access the app.
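The same check can be scripted, for example to wait on a deployment in CI. A sketch that reads `oc get pod` output in the format shown above and succeeds only if every pod of the app (excluding its -build and -deploy pods) is Running with READY at n/n; `pods_ready` is a hypothetical helper, and the name-prefix match would also catch another app whose name starts with the same prefix.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `oc get pod` output on stdin and exit 0 only if every
# pod named PREFIX-* (excluding -build and -deploy pods) is Running and fully ready.
pods_ready() {
  local prefix="$1"
  awk -v p="$prefix-" '
    NR == 1 { next }                                              # skip header
    index($1, p) != 1 || $1 ~ /-(build|deploy)$/ { next }         # other pods, build/deploy pods
    { found = 1; split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) bad = 1 }
    END { exit !(found && !bad) }
  '
}

# Usage:
# oc get pod | pods_ready hello-sinatra && echo deployed
```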

```
$ oc expose svc hello-sinatra
route "hello-sinatra" exposed
$ oc get route
NAME            HOST/PORT                                       PATH      SERVICES        PORT       TERMINATION   WILDCARD
hello-sinatra   hello-sinatra-test-ruby.192.168.42.225.nip.io             hello-sinatra   8080-tcp                 None
$ curl hello-sinatra-test-ruby.192.168.42.225.nip.io
curl: (6) Could not resolve host: hello-sinatra-test-ruby.192.168.42.225.nip.io
```

Hmm, an error. It seems nip.io, the DNS service minishift uses by default, is down. For now, let's switch to xip.io, a similar service.

```
$ oc delete route hello-sinatra
route "hello-sinatra" deleted
$ oc expose svc hello-sinatra --hostname hello-sinatra-test-ruby.192.168.42.225.xip.io
route "hello-sinatra" exposed
$ oc get route
NAME            HOST/PORT                                       PATH      SERVICES        PORT       TERMINATION   WILDCARD
hello-sinatra   hello-sinatra-test-ruby.192.168.42.225.xip.io             hello-sinatra   8080-tcp                 None
$ curl hello-sinatra-test-ruby.192.168.42.225.xip.io
hello
```

This time the application returned hello as expected.
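Another way around a wildcard DNS outage is to skip DNS entirely: curl's `--resolve` option pins the route host name to an IP, so the request reaches the router with the right Host header and the original nip.io route keeps working. A sketch assuming the router answers on port 80 of the VM IP, as the successful xip.io request suggests, and the default `<route>-<project>.<subdomain>` naming; `route_host` and `curl_route` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: default route host name <route>-<project>.<ip>.<wildcard domain>.
route_host() {
  local route="$1" project="$2" ip="$3" domain="${4:-nip.io}"
  echo "$route-$project.$ip.$domain"
}

# Hypothetical helper: request the route without any DNS lookup by pinning
# the host name to the VM IP on port 80.
curl_route() {
  local route="$1" project="$2" ip="$3" host
  host=$(route_host "$route" "$project" "$ip")
  curl -s --resolve "$host:80:$ip" "http://$host/"
}

# Usage:
# curl_route hello-sinatra test-ruby 192.168.42.225
```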

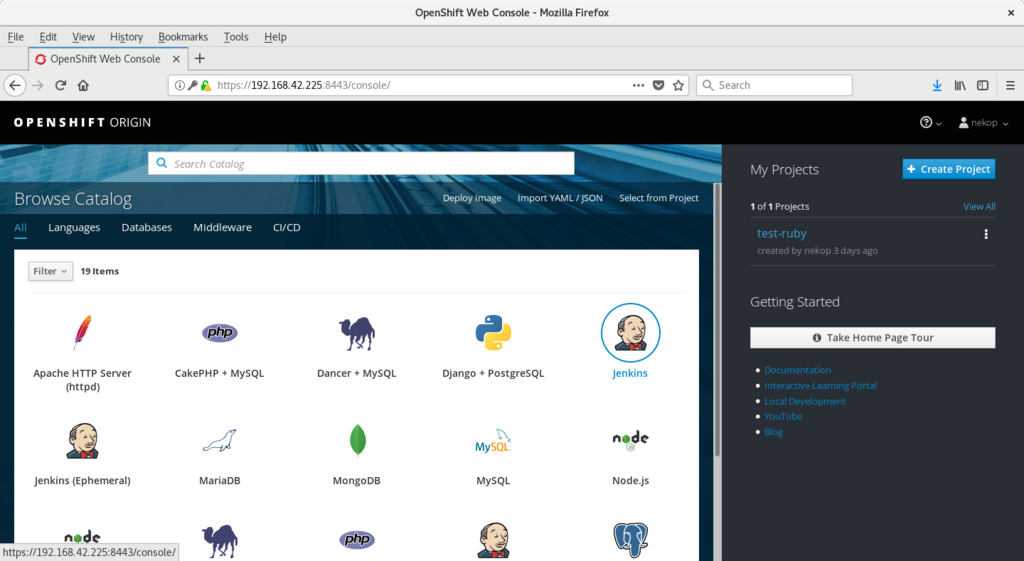

All of these steps can also be done from the web console GUI. The minishift console command opens the web console in your browser.

Looking inside the VM created by minishift


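Tools like minishift are great because everything gets set up as if by magic, but when something goes wrong, not knowing what is inside the VM can be a real nuisance. So let's take it apart.

The VM shows up in virsh as usual, and you can also check it with the minishift status command.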

```
$ sudo virsh list
 Id    Name                           State
----------------------------------------------------
 7     minishift                      running

$ minishift status
Minishift:  Running
Profile:    minishift
OpenShift:  Running (openshift v3.7.0+7ed6862)
DiskUsage:  5% of 37G
```

minishift ssh takes you into the VM, with a shell as the user docker. I guessed that sudo and the docker command would work as-is, and sure enough they do.

```
$ minishift ssh
Last login: Thu Nov 30 03:01:36 2017 from 192.168.42.1
[docker@minishift ~]$ docker ps
CONTAINER ID   IMAGE                                                                                                                     COMMAND                  CREATED          STATUS          PORTS   NAMES
3803a0f6fca1   docker.io/openshift/origin-haproxy-router@sha256:9fe2b3b8916b89fbabbd0c798655da6c808156b3c1f473bb8341bf64c6c560a5     "/usr/bin/openshift-r"   40 minutes ago   Up 40 minutes           k8s_router_router-1-bsnsw_default_3d2ee8f1-d5a0-11e7-8594-525400413df4_0
b9f2f6e2ae9b   docker.io/openshift/origin-docker-registry@sha256:9230bd859ce5a7fad13dc676efae72b2c86a9a0177cbd26db9b047be28620143    "/bin/sh -c '/usr/bin"   40 minutes ago   Up 40 minutes           k8s_registry_docker-registry-1-mk854_default_3b772e27-d5a0-11e7-8594-525400413df4_0
239e4eb40535   openshift/origin-pod:v3.7.0                                                                                               "/usr/bin/pod"           41 minutes ago   Up 41 minutes           k8s_POD_router-1-bsnsw_default_3d2ee8f1-d5a0-11e7-8594-525400413df4_0
8353bfb440fa   openshift/origin-pod:v3.7.0                                                                                               "/usr/bin/pod"           41 minutes ago   Up 41 minutes           k8s_POD_docker-registry-1-mk854_default_3b772e27-d5a0-11e7-8594-525400413df4_0
7322dc424f4b   openshift/origin:v3.7.0                                                                                                   "/usr/bin/openshift s"   41 minutes ago   Up 9 minutes            origin
[docker@minishift ~]$ docker images
REPOSITORY                                   TAG      IMAGE ID       CREATED        SIZE
docker.io/openshift/origin-haproxy-router    v3.7.0   b62f18316ed4   15 hours ago   1.121 GB
docker.io/openshift/origin-deployer          v3.7.0   abeb2913cd05   15 hours ago   1.099 GB
docker.io/openshift/origin                   v3.7.0   7ddd42ca061a   15 hours ago   1.099 GB
docker.io/openshift/origin-docker-registry   v3.7.0   359f3779b58f   15 hours ago   500.7 MB
docker.io/openshift/origin-pod               v3.7.0   73b7557fbb3a   15 hours ago   218.4 MB
[docker@minishift ~]$ sudo ls -la /root
total 36
dr-xr-x---.  2 root root 4096 Nov 30 02:59 .
dr-xr-xr-x. 16 root root 4096 Nov 30 02:27 ..
-rw-------.  1 root root   39 Nov 30 02:59 .bash_history
-rw-r--r--.  1 root root   18 Dec 28  2013 .bash_logout
-rw-r--r--.  1 root root  176 Dec 28  2013 .bash_profile
-rw-r--r--.  1 root root  176 Dec 28  2013 .bashrc
-rw-r--r--.  1 root root  100 Dec 28  2013 .cshrc
-rw-------.  1 root root 1024 Nov 30 02:27 .rnd
-rw-r--r--.  1 root root  129 Dec 28  2013 .tcshrc
```

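The docker ps output shows the origin container, which is OpenShift itself, plus the docker-registry and router pods.

The openshift and oc commands don't seem to exist inside the minishift VM. Running oc inside the OpenShift container with docker exec origin shows that it runs as system:admin, the OpenShift system administrator user. minishift's data appears to live under /var/lib/minishift/. There are also a few commands in /usr/local/bin that are presumably used during setup.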
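The container names in that output follow the kubelet's Docker naming scheme `k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>`, so they can be mapped back to pods. A minimal sketch; it splits on underscores, which works because pod, container, and namespace names cannot contain them. `k8s_containers` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Turn kubelet-managed Docker container names into "namespace/pod container attempt".
# Names that are not k8s-managed (such as origin) are skipped.
k8s_containers() {
  awk -F_ '$1 == "k8s" && NF == 6 { print $4 "/" $3, $2, $6 }'
}

# Usage inside the VM:
# docker ps --format '{{.Names}}' | k8s_containers
```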

```
[docker@minishift ~]$ which openshift oc
/usr/bin/which: no openshift in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/docker/.local/bin:/home/docker/bin)
/usr/bin/which: no oc in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/docker/.local/bin:/home/docker/bin)
[docker@minishift ~]$ sudo find / -type f -name openshift
[docker@minishift ~]$ sudo find / -type f -name oc
[docker@minishift ~]$ docker exec origin oc whoami
system:admin
[docker@minishift ~]$ docker exec origin oc get all
NAME                                REVISION   DESIRED   CURRENT   TRIGGERED BY
deploymentconfigs/docker-registry   1          1         1         config
deploymentconfigs/router            1          1         1         config

NAME                               READY     STATUS      RESTARTS   AGE
po/docker-registry-1-mk854         1/1       Running     0          45m
po/persistent-volume-setup-s5kcv   0/1       Completed   0          46m
po/router-1-bsnsw                  1/1       Running     0          45m

NAME                   DESIRED   CURRENT   READY     AGE
rc/docker-registry-1   1         1         1         46m
rc/router-1            1         1         1         46m

NAME                  CLUSTER-IP      EXTERNAL-IP   PORT(S)                   AGE
svc/docker-registry   172.30.1.1      <none>        5000/TCP                  46m
svc/kubernetes        172.30.0.1      <none>        443/TCP,53/UDP,53/TCP     46m
svc/router            172.30.254.47   <none>        80/TCP,443/TCP,1936/TCP   46m

NAME                           DESIRED   SUCCESSFUL   AGE
jobs/persistent-volume-setup   1         1            46m
[docker@minishift ~]$ sudo ls -la /var/lib/minishift/
total 24
drwxr-xr-x.   6 root root 4096 Nov 30 02:27 .
drwxr-xr-x.  28 root root 4096 Nov 30 02:27 ..
drwxr-xr-x.   3 root root 4096 Nov 30 03:01 hostdata
drwxr-xr-x.   4 root root 4096 Nov 30 02:28 openshift.local.config
drwxr-xr-x. 103 root root 4096 Nov 30 02:30 openshift.local.pv
drwxr-xr-x.   4 root root 4096 Nov 30 02:29 openshift.local.volumes
[docker@minishift ~]$ ls -l /usr/local/bin/
total 16
-rwxr-xr-x. 1 root root 2707 Oct  9 08:05 minishift-cert-gen
-rwxr-xr-x. 1 root root 5285 Oct  9 08:05 minishift-handle-user-data
-rwxr-xr-x. 1 root root  657 Oct  9 08:05 minishift-set-ipaddress
```
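Since oc inside the origin container runs as system:admin, a small wrapper on the host can run admin commands there without a separate login. This sketch assumes that `minishift ssh` runs a command given after `--` inside the VM; ssh joins the arguments into a single remote command line, so each argument is quoted with bash's `printf %q` first. `vm_oc` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run oc as system:admin inside the origin container
# of the minishift VM. Arguments are shell-quoted before being sent over ssh.
vm_oc() {
  local quoted
  quoted=$(printf ' %q' "$@")
  minishift ssh -- "docker exec origin oc$quoted"
}

# Usage:
# vm_oc get pods -n default
```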

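The directories under /var/lib/minishift contain the following:

hostdata - etcd data

openshift.local.config - OpenShift configuration files

openshift.local.pv - PVs created as hostPath volumes

openshift.local.volumes - volumes mounted into pods

There is a registry directory under openshift.local.pv, but there seems to be no matching PV, nor a PVC for docker-registry. Maybe it is unfinished, or something changed.

That gives a rough picture of the layout, so that's it for now.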

Using OpenShift Origin v3.7.0 with minishift


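OpenShift Origin 3.7 and OpenShift Container Platform 3.7 were released on November 29 (US time), so let's set up OpenShift Origin v3.7.0 with minishift v1.9.0.

minishift is a tool that starts an OpenShift environment in a single-node VM. As the installation guide below explains, it launches a VM, so you first need to set up a hypervisor, which differs by OS.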

https://docs.openshift.org/3.11/minishift/getting-started/installing.html

On Linux (Fedora 26) with KVM, the setup looks like this. In this example the minishift binary goes straight into /usr/bin. On Windows or Mac, see the corresponding setup instructions at the URL above.

```
curl -LO https://github.com/minishift/minishift/releases/download/v1.9.0/minishift-1.9.0-linux-amd64.tgz
sudo tar xf minishift-1.9.0-linux-amd64.tgz --strip=1 -C /usr/bin minishift-1.9.0-linux-amd64/minishift
sudo curl -L https://github.com/dhiltgen/docker-machine-kvm/releases/download/v0.7.0/docker-machine-driver-kvm -o /usr/local/bin/docker-machine-driver-kvm
sudo chmod +x /usr/local/bin/docker-machine-driver-kvm
sudo dnf install libvirt qemu-kvm -y
sudo usermod -a -G libvirt <username>
newgrp libvirt
```
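Before the first `minishift start`, a quick pre-flight check can confirm the pieces installed above. A sketch with the paths above as defaults; `check_minishift_prereqs` is a hypothetical helper. The group check looks at the current session, which is why the steps above end with `newgrp libvirt`: group changes from `usermod` only apply to new logins.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: the minishift binary and KVM driver are executable,
# and the current session belongs to the libvirt group (or GROUP if given).
check_minishift_prereqs() {
  local minishift_bin="${1:-/usr/bin/minishift}"
  local kvm_driver="${2:-/usr/local/bin/docker-machine-driver-kvm}"
  local group="${3:-libvirt}"
  local rc=0
  [ -x "$minishift_bin" ] || { echo "not executable: $minishift_bin" >&2; rc=1; }
  [ -x "$kvm_driver" ] || { echo "not executable: $kvm_driver" >&2; rc=1; }
  if ! id -nG | tr ' ' '\n' | grep -qxF "$group"; then
    echo "current session is not in group $group (log in again or run newgrp)" >&2
    rc=1
  fi
  return "$rc"
}
```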

Now run minishift, and an OpenShift Origin v3.7.0 environment comes up.

$ minishift start --openshift-version v3.7.0 --iso-url centos --cpus 4 --memory 4GB --disk-size 40GB
-- Checking if requested hypervisor 'kvm' is supported on this platform ... OK
-- Checking if KVM driver is installed ...
   Driver is available at /usr/local/bin/docker-machine-driver-kvm ...
   Checking driver binary is executable ... OK
-- Checking if Libvirt is installed ... OK
-- Checking if Libvirt default network is present ... OK
-- Checking if Libvirt default network is active ... OK
-- Checking the ISO URL ... OK
-- Starting profile 'minishift'
-- Starting local OpenShift cluster using 'kvm' hypervisor ...
-- Minishift VM will be configured with ...
   Memory:    4 GB
   vCPUs :    4
   Disk size: 40 GB

   Downloading ISO 'https://github.com/minishift/minishift-centos-iso/releases/download/v1.3.0/minishift-centos7.iso'
323.00 MiB / 323.00 MiB [=============================================================================================================================================================================] 100.00% 0s
-- Starting Minishift VM ............... OK
-- Checking for IP address ... OK
-- Checking if external host is reachable from the Minishift VM ...
   Pinging 8.8.8.8 ... OK
-- Checking HTTP connectivity from the VM ...
   Retrieving http://minishift.io/index.html ... OK
-- Checking if persistent storage volume is mounted ... OK
-- Checking available disk space ... 1% used OK
-- Downloading OpenShift binary 'oc' version 'v3.7.0'
38.51 MiB / 38.51 MiB [===============================================================================================================================================================================] 100.00% 0s
-- Downloading OpenShift v3.7.0 checksums ... OK
-- OpenShift cluster will be configured with ...
   Version: v3.7.0
-- Checking `oc` support for startup flags ...
   host-config-dir ... OK
   host-data-dir ... OK
   host-pv-dir ... OK
   host-volumes-dir ... OK
   version ... OK
   routing-suffix ... OK
Starting OpenShift using openshift/origin:v3.7.0 ...
Pulling image openshift/origin:v3.7.0
Pulled 1/4 layers, 26% complete
Pulled 2/4 layers, 74% complete
Pulled 3/4 layers, 85% complete
Pulled 4/4 layers, 100% complete
Extracting
Image pull complete
OpenShift server started.

The server is accessible via web console at:
    https://192.168.42.225:8443

You are logged in as:
    User:     developer
    Password: <any value>

To login as administrator:
    oc login -u system:admin
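The start help quoted later in this article notes that every start flag can also be stored as a persistent configuration option through minishift config, so the long flag list above does not have to be retyped on each start. This is a minimal sketch that persists the options used above; it assumes the config property names match the start flag names (openshift-version, iso-url, cpus, memory, disk-size), which you should confirm with minishift config -h. The function name persist_start_options and the MINISHIFT override for dry runs are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: persist the `minishift start` options used above.
# Assumption: config property names mirror the start flag names.
# MINISHIFT can be overridden (e.g. MINISHIFT=echo) for a dry run.
persist_start_options() {
  local mc="${MINISHIFT:-minishift}"
  "$mc" config set openshift-version v3.7.0 &&
  "$mc" config set iso-url centos &&
  "$mc" config set cpus 4 &&
  "$mc" config set memory 4GB &&
  "$mc" config set disk-size 40GB
}
```

After running it, minishift config view should list the stored values, and a plain minishift start should pick them up.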

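To use the oc command, one option is to run eval $(minishift oc-env) and source <(oc completion bash) so that the current shell picks up the binary and its completion. That is tedious, though, so I simply copy the binary into /usr/bin.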

$ eval $(minishift oc-env)
$ source <(oc completion bash)
$ sudo cp ~/.minishift/cache/oc/v3.7.0/linux/oc /usr/bin/
$ sudo sh -c "oc completion bash > /etc/bash_completion.d/oc"
$ oc whoami
developer
$ oc status
In project My Project (myproject) on server https://192.168.42.225:8443

You have no services, deployment configs, or build configs.
Run 'oc new-app' to create an application.
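If you would rather not copy files into /usr/bin, prepending the cached binary's directory to PATH achieves much the same as eval $(minishift oc-env), and can live in ~/.bashrc. This is a minimal sketch assuming the cache layout ~/.minishift/cache/oc/<version>/linux seen in the cp command above; the function name use_minishift_oc is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: put the oc binary cached by minishift on PATH.
# Assumes the cache layout ~/.minishift/cache/oc/<version>/linux seen above.
use_minishift_oc() {
  local version="${1:-v3.7.0}"
  local dir="$HOME/.minishift/cache/oc/$version/linux"
  if [ ! -x "$dir/oc" ]; then
    echo "oc $version not found in $dir" >&2
    return 1
  fi
  case ":$PATH:" in
    *":$dir:"*) ;;                     # already on PATH, nothing to do
    *) PATH="$dir:$PATH" ;;
  esac
  export PATH
}

# Example for ~/.bashrc:
#   use_minishift_oc v3.7.0 && source <(oc completion bash)
```

Calling it twice leaves PATH unchanged the second time, so it is safe to source from several startup files.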

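By default, minishift boots a VM running boot2docker, a lightweight Docker host OS. However, boot2docker has SELinux disabled, so some things behave differently than on a real host, which makes it a questionable environment for running containers. That is why I start minishift with --iso-url centos.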

For reference, here is minishift's help output.

$ minishift version
minishift v1.9.0+a511b25

$ minishift help
Minishift is a command-line tool that provisions and manages single-node OpenShift clusters optimized for development workflows.

Usage:
  minishift [command]

Available Commands:
  addons      Manages Minishift add-ons.
  config      Modifies Minishift configuration properties.
  console     Opens or displays the OpenShift Web Console URL.
  delete      Deletes the Minishift VM.
  docker-env  Sets Docker environment variables.
  help        Help about any command
  hostfolder  Manages host folders for the OpenShift cluster.
  ip          Gets the IP address of the running cluster.
  logs        Gets the logs of the running OpenShift cluster.
  oc-env      Sets the path of the 'oc' binary.
  openshift   Interacts with your local OpenShift cluster.
  profile     Manages Minishift profiles.
  ssh         Log in to or run a command on a Minishift VM with SSH.
  start       Starts a local OpenShift cluster.
  status      Gets the status of the local OpenShift cluster.
  stop        Stops the running local OpenShift cluster.
  update      Updates Minishift to the latest version.
  version     Gets the version of Minishift.

Flags:
      --alsologtostderr                  log to standard error as well as files
  -h, --help                             help for minishift
      --log_backtrace_at traceLocation   when logging hits line file:N, emit a stack trace (default :0)
      --log_dir string                   If non-empty, write log files in this directory
      --logtostderr                      log to standard error instead of files
      --profile string                   Profile name (default "minishift")
      --show-libmachine-logs             Show logs from libmachine.
      --stderrthreshold severity         logs at or above this threshold go to stderr (default 2)
  -v, --v Level                          log level for V logs
      --vmodule moduleSpec               comma-separated list of pattern=N settings for file-filtered logging

Use "minishift [command] --help" for more information about a command.

$ minishift start --help
Starts a local single-node OpenShift cluster.

All flags of this command can also be configured by setting corresponding environment variables or persistent configuration options.
For the former prefix the flag with MINISHIFT_, uppercase characters and replace '-' with '_', for example MINISHIFT_VM_DRIVER.
For the latter see 'minishift config -h'.

Usage:
  minishift start [flags]

Flags:
  -a, --addon-env stringSlice           Specify key-value pairs to be added to the add-on interpolation context.
      --cpus int                        Number of CPU cores to allocate to the Minishift VM. (default 2)
      --disk-size string                Disk size to allocate to the Minishift VM. Use the format <size><unit>, where unit = MB or GB. (default "20GB")
      --docker-env stringSlice          Environment variables to pass to the Docker daemon. Use the format <key>=<value>.
      --docker-opt stringSlice          Specify arbitrary flags to pass to the Docker daemon in the form <flag>=<value>.
  -h, --help                            help for start
      --host-config-dir string          Location of the OpenShift configuration on the Docker host. (default "/var/lib/minishift/openshift.local.config")
      --host-data-dir string            Location of the OpenShift data on the Docker host. If not specified, etcd data will not be persisted on the host. (default "/var/lib/minishift/hostdata")
      --host-only-cidr string           The CIDR to be used for the minishift VM. (Only supported with VirtualBox driver.) (default "192.168.99.1/24")
      --host-pv-dir string              Directory on Docker host for OpenShift persistent volumes (default "/var/lib/minishift/openshift.local.pv")
      --host-volumes-dir string         Location of the OpenShift volumes on the Docker host. (default "/var/lib/minishift/openshift.local.volumes")
      --http-proxy string               HTTP proxy in the format http://<username>:<password>@<proxy_host>:<proxy_port>. Overrides potential HTTP_PROXY setting in the environment.
      --https-proxy string              HTTPS proxy in the format https://<username>:<password>@<proxy_host>:<proxy_port>. Overrides potential HTTPS_PROXY setting in the environment.
      --insecure-registry stringSlice   Non-secure Docker registries to pass to the Docker daemon. (default )
      --iso-url string                  Location of the minishift ISO. Can be an URL, file URI or one of the following short names: [b2d centos]. (default "b2d")
      --logging                         Install logging (experimental)
      --memory string                   Amount of RAM to allocate to the Minishift VM. Use the format <size><unit>, where unit = MB or GB. (default "2GB")
      --metrics                         Install metrics (experimental)
      --no-proxy string                 List of hosts or subnets for which no proxy should be used.
  -e, --openshift-env stringSlice       Specify key-value pairs of environment variables to set on the OpenShift container.
      --openshift-version string        The OpenShift version to run, eg. v3.6.0 (default "v3.6.0")
      --password string                 Password for the virtual machine registration.
      --public-hostname string          Public hostname of the OpenShift cluster.
      --registry-mirror stringSlice     Registry mirrors to pass to the Docker daemon.
      --routing-suffix string           Default suffix for the server routes.
      --server-loglevel int             Log level for the OpenShift server.
      --skip-registration               Skip the virtual machine registration.
      --skip-registry-check             Skip the Docker daemon registry check.
      --username string                 Username for the virtual machine registration.
      --vm-driver string                The driver to use for the Minishift VM. Possible values: [virtualbox kvm] (default "kvm")

Global Flags:
      --alsologtostderr                  log to standard error as well as files
      --log_backtrace_at traceLocation   when logging hits line file:N, emit a stack trace (default :0)
      --log_dir string                   If non-empty, write log files in this directory (default "")
      --logtostderr                      log to standard error instead of files
      --profile string                   Profile name (default "minishift")
      --show-libmachine-logs             Show logs from libmachine.
      --stderrthreshold severity         logs at or above this threshold go to stderr (default 2)
  -v, --v Level                          log level for V logs
      --vmodule moduleSpec               comma-separated list of pattern=N settings for file-filtered logging
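The start help states the rule for turning a flag into an environment variable: prefix it with MINISHIFT_, uppercase it, and replace '-' with '_'. That rule fits in a few lines. This is a minimal sketch; the function name flag_to_env is hypothetical and simply applies the rule as the help text describes it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a `minishift start` flag name into the
# environment variable name described in the help text:
# prefix MINISHIFT_, uppercase the name, replace '-' with '_'.
flag_to_env() {
  local flag="${1#--}"                 # accept both "vm-driver" and "--vm-driver"
  flag="${flag//-/_}"
  flag="$(printf '%s' "$flag" | tr '[:lower:]' '[:upper:]')"
  printf 'MINISHIFT_%s\n' "$flag"
}

# Example: the environment-variable equivalent of `--iso-url centos`
#   export "$(flag_to_env iso-url)=centos"    # exports MINISHIFT_ISO_URL=centos
```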

Red Hat Container Development Kit also includes minishift. I'll cover that another time.